Healthy Volunteer Protocol Upload Process

| (7 intermediate revisions by the same user not shown) | |||

| Line 26: | Line 26: | ||

==BIDS format / OpenNeuro== |

==BIDS format / OpenNeuro== |

||

All data is |

All data is converted to BIDS format and uploaded to OpenNeuro as an open access dataset. |

||

Data triggers are cleaned using several routines listed below. These have been used to realign stimulus triggers to optical onset of the projector. Datasets that have logfiles have been merged |

Data triggers are cleaned using several routines listed below. These have been used to realign stimulus triggers to optical onset of the projector. Datasets that have logfiles have been merged |

||

with the trigger data to label triggers and responses. |

with the trigger data to label triggers and responses. |

||

==Processing on Biowulf== |

==Processing on Biowulf== |

||

| Line 50: | Line 49: | ||

Remove history files and replace paths/dates/names in text files |

Remove history files and replace paths/dates/names in text files |

||

==Add data and logfile paths to bashrc== |

|||

in ~/.basrhc |

|||

export hv_meg_path=/$PATH |

|||

export hv_logfile_path=/$PATHlogfile |

|||

==Processing steps in process_hv_data.py== |

==Processing steps in process_hv_data.py== |

||

| Line 57: | Line 61: | ||

Process Tasks and assert ouputs match expected: |

Process Tasks and assert ouputs match expected: |

||

process_airpuff.py |

|||

process_oddball.py |

|||

process_hariri.py |

|||

process_sternberg.py |

|||

sternberg_processing.py |

|||

process_gonogo.py |

|||

Calculate the noise level |

Calculate the noise level |

||

Scrub path info and history text files from the datasets to remove date and identifiers |

Scrub path info and history text files from the datasets to remove date and identifiers |

||

==General Task Analysis Processing== |

==General Task Analysis Processing== |

||

===Invert Trigger=== |

===Invert Trigger=== |

||

During some tasks the trigger randomly inverts. |

During some tasks the trigger randomly inverts. A 10-bin histogram is calculated for the analog trigger channels. The top two bins by count represent the ON and OFF state of the stimuli. The expectation is that the OFF state should represent more time during the task than the ON state, if not the trigger is inverted prior to analysis. |

||

===Threshold Detection=== |

===Threshold Detection=== |

||

Amplitude detection based on normalizing analog signal between 0 and 1 and derivative threshold. Temporary filters may be applied to the data during this operation. |

Amplitude detection based on normalizing analog signal between 0 and 1. The threshold is then applied to the normalized signal and an optional derivative threshold. Temporary filters may be applied to the data during this operation. |

||

===Merge Logfile=== |

===Merge Logfile=== |

||

For psychoPy tasks (Sternberg, Hariri, oddball), the text logfile is imported. The first few timepoints representing instruction screens are removed from the file and the rest are added to the dataframe. |

For psychoPy tasks (Sternberg, Hariri, oddball), the text logfile (.log) is imported. The first few timepoints representing instruction screens are removed from the file and the rest are added to the dataframe. |

||

===Coding Analog stimuli with Parallel port Values=== |

===Coding Analog stimuli with Parallel port Values=== |

||

| Line 81: | Line 84: | ||

===Reset Timing to Optical Trigger=== |

===Reset Timing to Optical Trigger=== |

||

Due to projector frame buffering there can be timing jitter in the presentation of visual stimuli to the subject. The |

Due to projector frame buffering there can be timing jitter in the presentation of visual stimuli to the subject. The stimulus computer sends a parallel port trigger to the acquisition computer and the stimuli to the projector. The visual tasks have been designed to activate the upper right corner with a specific bit value The projector - Propix (model ????) has an output BNC signal that triggers when the top right pixel is set to the value 255 (??). This optical trigger is coded on channel UADC016. |

||

===Response Coding=== |

===Response Coding=== |

||

The correct response is calculated from either the parrallel port trigger or the logfile information depending on the task. The expected responses are judged against the response to determine if a response_hit, response_miss, response_false_alarm, response_correct_rejection. Some tasks require a response for every stimuli (eg hariri_hammer), while others require responses |

|||

The correct response is calculated.... |

|||

==Task Specific Processing== |

==Task Specific Processing== |

||

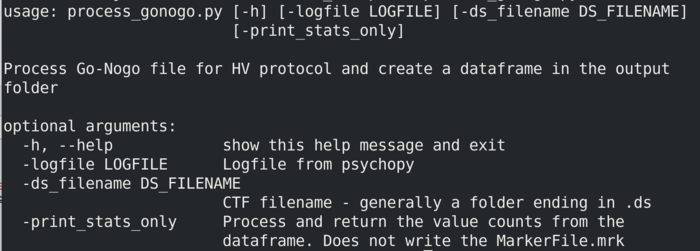

All task processing scripts have a commandline parsing component that can be called independently. The typical format is below. If print_stats_only is flagged, then the results will not be output to the marker file. This can be useful if the QA fails or to check results after a change in the stimulus setup. |

|||

[[File:Process_help.png | 700px]] |

|||

===Oddball Task=== |

===Oddball Task=== |

||

Description: 3 sound stimuli presented to the participant. The participant attends to the "standard" tone stimuli (210 epochs) and is required to respond to the "target" tone stimuli (45 epochs) that are intermixed with the standard tone. Additionally a broadband noise stimuli is presented as a "distractor" stimuli (45 epochs). |

Description: 3 sound stimuli presented to the participant. The participant attends to the "standard" tone stimuli (210 epochs) and is required to respond to the "target" tone stimuli (45 epochs) that are intermixed with the standard tone. Additionally a broadband noise stimuli is presented as a "distractor" stimuli (45 epochs). |

||

| Line 125: | Line 131: | ||

Files have been compiled into a folder hv_proc |

Files have been compiled into a folder hv_proc |

||

This contains script interfaces into the megblocks package |

This contains script interfaces into the megblocks package |

||

=Data Quality Assurance and PreUpload= |

|||

Verify that all tasks have the appropriate number of triggers |

|||

hv_proc/utilities/marker_quality_assurance.py -data_folder $DATA |

|||

Latest revision as of 15:16, 29 May 2020

Code

Requirements and Installation

pyctf: General utilities to interface with CTF data using Python; also provides BIDS processing utilities. https://megcore.nih.gov/index.php/Pyctf (a more recent update will be available soon)

hv_proc: Python scripts to extract and mark HV-specific stimuli, validate trigger/response timing, and perform data QA. Open access release in development.

NIH MEG BIDS processing: Routines to convert the CTF MEG data into BIDS format using mne_bids and bids_validator. https://github.com/nih-fmrif/meg_bids/blob/master/1_mne_bids_extractor.ipynb

mne_bids: https://mne.tools/mne-bids/stable/index.html
pip install -U mne
pip install -U mne-bids

AFNI: Required for extracting HPI coil locations. https://afni.nimh.nih.gov/pub/dist/doc/htmldoc/background_install/install_instructs/index.html

BIDS Validator: https://github.com/bids-standard/bids-validator

BIDS format / OpenNeuro

All data are converted to BIDS format and uploaded to OpenNeuro as an open-access dataset. Trigger channels are cleaned using the routines listed below, which realign stimulus triggers to the optical onset of the projector. For datasets that have logfiles, the logfile is merged with the trigger data to label triggers and responses.

Processing on Biowulf

To run the scripts on Biowulf, pyctf and hv_proc must be on your conda Python path (if necessary, add their file paths to a .pth file in the conda site-packages folder), and the ctf and afni modules must be loaded:
module load ctf
module load afni
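A minimal sketch of the .pth option, using only the standard library. It assumes the directories to add are the ones that contain the pyctf and hv_proc packages; the file name hv_paths.pth and the helper write_pth are hypothetical. At startup, Python's site module reads every .pth file in site-packages and appends each listed directory that exists to sys.path.

```python
import os
import sysconfig


def write_pth(paths, site_dir=None, name="hv_paths.pth"):
    """Write a .pth file listing extra import directories (hypothetical helper).

    Each line of a .pth file in site-packages is appended to sys.path when
    Python starts, provided that directory exists.
    """
    if site_dir is None:
        # purelib is the site-packages directory of the active (conda) environment
        site_dir = sysconfig.get_paths()["purelib"]
    pth_file = os.path.join(site_dir, name)
    with open(pth_file, "w") as fh:
        for path in paths:
            fh.write(os.path.abspath(os.path.expanduser(path)) + "\n")
    return pth_file
```

For example, after conda activate hv_proc, calling write_pth(["~/src/pyctf_parent", "~/src/hv_proc_parent"]) (hypothetical locations) and then running python -c "import pyctf, hv_proc" should confirm that both packages are importable.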

Processing Steps

Acquire data.
Copy raw data to Biowulf: $MEG_folder/NIMH_HV/MEG
Copy data to bids_staging: $MEG_folder/NIMH_HV/bids_staging

sinteractive --mem=8G --cpus-per-task=4   # Start a small interactive session
conda activate hv_proc
module load afni
module load ctf   # Required for addmarks

process_hv_data -subject_folder $Subject_Folder   # Loops over all task processing steps and creates QA documents

Remove history files and replace paths/dates/names in text files

Add data and logfile paths to bashrc

In ~/.bashrc (replace /$PATH and /$PATHlogfile with the MEG data and logfile directories):
export hv_meg_path=/$PATH
export hv_logfile_path=/$PATHlogfile
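How hv_proc consumes these variables is not documented here. The sketch below assumes they are read from the environment at run time and fails early with a clear message when one is missing; the function name get_hv_paths is hypothetical.

```python
import os
from pathlib import Path


def get_hv_paths(env=None):
    """Return (meg_path, logfile_path) from the hv_* environment variables (hypothetical helper)."""
    env = os.environ if env is None else env
    missing = [name for name in ("hv_meg_path", "hv_logfile_path") if not env.get(name)]
    if missing:
        raise RuntimeError(
            "Missing environment variable(s): " + ", ".join(missing)
            + "; export them in ~/.bashrc and open a new shell"
        )
    return Path(env["hv_meg_path"]), Path(env["hv_logfile_path"])
```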

Processing steps in process_hv_data.py

Loop over the following scripts:
Convert MRI fiducials to tags (code name still to be added):
pyctf.bids.extract_tags $subjid_anat+orig.BRIK > tagfile

Process tasks and assert that outputs match expectations:
process_airpuff.py
process_oddball.py
process_hariri.py
process_sternberg.py
process_gonogo.py

Calculate the noise level.
Scrub path information and history text files from the datasets to remove dates and identifiers.
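The scrubbing rules are not spelled out in the source. The sketch below assumes history files end in .hist and other text files end in .txt, and uses illustrative date formats and placeholder strings. scrub_text, scrub_dataset and the patterns are hypothetical, not the hv_proc implementation, and the noise-level calculation is not covered.

```python
import re
from pathlib import Path

# Hypothetical date formats, e.g. "2020-05-29", "05/29/2020", "29-May-2020"
DATE_RE = re.compile(
    r"\b(\d{4}-\d{2}-\d{2}|\d{1,2}/\d{1,2}/\d{4}|\d{1,2}-[A-Za-z]{3}-\d{4})\b"
)


def scrub_text(text, path_prefixes, identifiers):
    """Replace path prefixes, identifiers and dates with neutral placeholders."""
    for prefix in path_prefixes:
        text = text.replace(prefix, "/PATH")
    for ident in identifiers:
        text = text.replace(ident, "SUBJECT")
    return DATE_RE.sub("DATE", text)


def scrub_dataset(ds_dir, path_prefixes, identifiers):
    """Delete *.hist files and scrub *.txt files in place inside one dataset folder."""
    ds_dir = Path(ds_dir)
    for hist in list(ds_dir.rglob("*.hist")):
        hist.unlink()
    for txt in list(ds_dir.rglob("*.txt")):
        cleaned = scrub_text(txt.read_text(errors="replace"), path_prefixes, identifiers)
        txt.write_text(cleaned)
```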

General Task Analysis Processing

Invert Trigger

During some tasks the trigger polarity randomly inverts. A 10-bin histogram is calculated for each analog trigger channel, and the two most populated bins are taken to represent the ON and OFF states of the stimulus. The OFF state is expected to occupy more of the task than the ON state; if it does not, the trigger is inverted prior to analysis.
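A minimal pure-Python sketch of the histogram rule, assuming that with normal polarity the OFF state is the lower voltage level. Mirroring the signal within its own range (hi + lo - x) is an assumed way to invert it, and the function name is hypothetical.

```python
def fix_inverted_trigger(signal, n_bins=10):
    """Flip an analog trigger whose most common (OFF) level sits above the ON level.

    The two most populated histogram bins are taken as the OFF and ON states;
    OFF is assumed to be the more frequent one and, normally, the lower level.
    """
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return list(signal)  # flat channel: nothing to decide
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in signal:
        counts[min(int((x - lo) / width), n_bins - 1)] += 1
    # Bin indices ordered by count: first = OFF state, second = ON state
    off_bin, on_bin = sorted(range(n_bins), key=lambda i: counts[i], reverse=True)[:2]
    if off_bin > on_bin:
        # OFF level lies above the ON level: mirror the signal within its own range
        return [hi + lo - x for x in signal]
    return list(signal)
```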

Threshold Detection

Events are detected by amplitude: the analog signal is normalized to the range 0 to 1, and a threshold is applied to the normalized signal, optionally combined with a threshold on its derivative. Temporary filters may be applied to the data during this operation.
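A sketch of the amplitude-plus-derivative rule on a single channel. Detecting rising edges only, the default threshold of 0.5, and applying the derivative test at the crossing sample are assumptions; the optional filtering step is omitted and the function name is hypothetical.

```python
def detect_onsets(signal, threshold=0.5, deriv_threshold=None):
    """Return sample indices where the 0-1 normalized signal rises through threshold.

    If deriv_threshold is given, a crossing is kept only when the sample-to-sample
    increase of the normalized signal at that point is at least deriv_threshold.
    """
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return []  # flat channel: no events
    norm = [(x - lo) / (hi - lo) for x in signal]
    onsets = []
    for i in range(1, len(norm)):
        if norm[i - 1] < threshold <= norm[i]:
            if deriv_threshold is None or norm[i] - norm[i - 1] >= deriv_threshold:
                onsets.append(i)
    return onsets
```

The derivative criterion rejects slow drifts that happen to cross the amplitude threshold, while square trigger pulses pass both tests.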

Merge Logfile

For PsychoPy tasks (Sternberg, Hariri, oddball), the text logfile (.log) is imported. The first few entries, which correspond to instruction screens, are removed, and the remaining entries are added to the dataframe.
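A stdlib-only sketch of the logfile merge. It assumes PsychoPy .log lines of the form time, level and message separated by tabs, that the number of instruction entries to drop is known per task, and that the remaining entries line up one-to-one with the detected triggers. A list of dicts stands in for the dataframe (pandas.DataFrame(merged) would build one); all function names are hypothetical.

```python
def parse_psychopy_log(lines, n_instruction=0, level=None):
    """Parse 'time<TAB>LEVEL<TAB>message' log lines into a list of dicts.

    Lines without a numeric time are skipped. If level is given (e.g. "EXP"),
    only entries with that log level are kept. The first n_instruction
    remaining entries (instruction screens) are dropped.
    """
    events = []
    for line in lines:
        parts = [p.strip() for p in line.rstrip("\n").split("\t", 2)]
        if len(parts) < 3 or (level is not None and parts[1] != level):
            continue
        try:
            log_time = float(parts[0])
        except ValueError:
            continue  # header or malformed line
        events.append({"log_time": log_time, "level": parts[1], "message": parts[2]})
    return events[n_instruction:]


def merge_log_with_triggers(events, trigger_onsets):
    """Pair log entries with trigger onsets in order of occurrence."""
    if len(events) != len(trigger_onsets):
        raise ValueError(
            f"{len(events)} log entries but {len(trigger_onsets)} triggers; check n_instruction"
        )
    return [dict(event, onset=onset) for event, onset in zip(events, trigger_onsets)]
```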

Coding Analog stimuli with Parallel port Values

Analog triggers are generally reduced to a single ON/OFF state, so the parallel port values can be used to code each analog event with its stimulus value.
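A sketch of one way to attach parallel port values to analog events: each analog onset takes the value of the nearest parallel port event within a tolerance window. The matching rule, the max_lag tolerance and the function name are assumptions.

```python
def code_analog_events(analog_onsets, ppt_events, max_lag):
    """Label each analog ON onset with the nearest parallel port value.

    analog_onsets: sample indices of analog ON transitions.
    ppt_events: list of (sample_index, value) from the parallel port channel.
    max_lag: largest allowed |analog - ppt| distance in samples.
    Returns a list of (analog_onset, value or None).
    """
    coded = []
    for onset in analog_onsets:
        best = None  # (lag, value) of the closest parallel port event so far
        for sample, value in ppt_events:
            lag = abs(onset - sample)
            if lag <= max_lag and (best is None or lag < best[0]):
                best = (lag, value)
        coded.append((onset, best[1] if best else None))
    return coded
```

Onsets left as None have no parallel port partner and are good candidates for a QA warning.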

Reset Timing to Optical Trigger

Because of projector frame buffering, there can be timing jitter in the presentation of visual stimuli to the subject. The stimulus computer sends a parallel port trigger to the acquisition computer and the stimulus to the projector. The visual tasks have been designed to activate the upper right corner with a specific bit value. The projector (Propix, model ????) has an output BNC signal that triggers when the top right pixel is set to the value 255 (??). This optical trigger is recorded on channel UADC016, and stimulus trigger times are reset to its onset.
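A sketch of the realignment, assuming onsets are sample indices already detected on the parallel port channel and on UADC016, and that each optical onset follows its parallel port trigger (buffering can only delay the display). The max_delay tolerance and the function name are hypothetical; triggers without a matching optical onset are kept and reported so QA can flag them.

```python
from bisect import bisect_left


def align_to_optical(ppt_onsets, optical_onsets, max_delay):
    """Move each parallel port onset to the first optical onset at or after it.

    If no optical onset occurs within max_delay samples after a trigger, the
    trigger keeps its original time and is listed as unmatched.
    """
    optical = sorted(optical_onsets)
    aligned, unmatched = [], []
    for onset in ppt_onsets:
        i = bisect_left(optical, onset)  # index of first optical onset >= trigger
        if i < len(optical) and optical[i] - onset <= max_delay:
            aligned.append(optical[i])
        else:
            aligned.append(onset)
            unmatched.append(onset)
    return aligned, unmatched
```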

Response Coding

The correct response is calculated from either the parallel port trigger or the logfile information, depending on the task. Each observed response is compared with the expected response to classify the trial as response_hit, response_miss, response_false_alarm, or response_correct_rejection. Some tasks require a response for every stimulus (e.g., hariri_hammer), while others require responses
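A sketch of the four-way labelling for stimuli that either do or do not require a response. The response window, the (onset, requires_response) input layout and the function name are assumptions; tasks that score which button was pressed would need an extra comparison against the expected button.

```python
def classify_responses(stimuli, responses, window):
    """Label each stimulus with a signal-detection outcome.

    stimuli: list of (onset, requires_response) tuples, onsets in samples.
    responses: response onsets in samples.
    window: (min_lag, max_lag) after stimulus onset in which a response counts.
    """
    lo, hi = window
    labels = []
    for onset, requires_response in stimuli:
        responded = any(lo <= r - onset <= hi for r in responses)
        if requires_response:
            labels.append("response_hit" if responded else "response_miss")
        else:
            labels.append("response_false_alarm" if responded else "response_correct_rejection")
    return labels
```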

Task Specific Processing

Each task processing script has a command-line interface and can be called independently. The typical format is shown below. If print_stats_only is flagged, the results are not written to the marker file; this is useful when QA fails or for checking results after a change in the stimulus setup.

[Figure: Process_help.png, command-line help of a task processing script]
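A minimal argparse sketch of this pattern. Only print_stats_only comes from the description; -meg_file and the rest of the interface are hypothetical and do not reproduce the real scripts' arguments, which should be checked in each script's help output.

```python
import argparse


def build_parser():
    # Hypothetical interface: only -print_stats_only is taken from the description
    parser = argparse.ArgumentParser(description="Mark task stimuli and responses in a CTF dataset")
    parser.add_argument("-meg_file", required=True, help="Path to the task .ds folder")
    parser.add_argument(
        "-print_stats_only",
        action="store_true",
        help="Print trigger/response statistics without writing the marker file",
    )
    return parser


def main(argv=None):
    args = build_parser().parse_args(argv)
    # Task-specific trigger detection and response coding would run here
    print(f"Processed {args.meg_file}")
    if args.print_stats_only:
        return False  # QA/inspection mode: the marker file is left untouched
    print("Writing marker file")  # the real script would write its markers here
    return True


if __name__ == "__main__":
    main()
```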

Oddball Task

Description: Three sound stimuli are presented to the participant. The participant attends to the "standard" tone (210 epochs) and must respond to the "target" tone (45 epochs), which is intermixed with the standard tones. Additionally, a broadband noise stimulus is presented as a "distractor" (45 epochs).

UADC003 - Left ear auditory stimulus
UADC004 - Right ear auditory stimulus
UADC005 - Participant response
UPPT001 - Stimulus coding (standard: 1, target: 2, distractor: 3)
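Using the UPPT001 coding and the epoch counts given above, a count check for one oddball run can be sketched as follows. It assumes the parallel port values have already been extracted as one integer per event; the names are hypothetical.

```python
from collections import Counter

# UPPT001 coding and expected epoch counts from the task description
ODDBALL_CODES = {1: "standard", 2: "target", 3: "distractor"}
ODDBALL_EXPECTED = {"standard": 210, "target": 45, "distractor": 45}


def check_oddball_counts(ppt_values):
    """Return a list of problems; an empty list means the counts match."""
    counts = Counter(ppt_values)
    problems = []
    for code, name in ODDBALL_CODES.items():
        expected = ODDBALL_EXPECTED[name]
        if counts[code] != expected:  # Counter returns 0 for missing codes
            problems.append(f"{name}: expected {expected}, found {counts[code]}")
    for code in sorted(set(counts) - set(ODDBALL_CODES)):
        problems.append(f"unexpected UPPT001 value {code} (count {counts[code]})")
    return problems
```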

oddball_processing.py

Hariri Hammer

Emotional processing task contrasting happy and sad faces, with shapes used as a neutral baseline. An initial "top stim" is shown, followed by a fixation crosshair. During the "choice stim", the subject presses the left or right response button corresponding to the face that matches the "top stim".

UADC006 - Left response
UADC007 - Right response
UADC016 - Projector onset
UPPT001 - Parallel port stimuli

Temporal Coding (UPPT001)

Top Stim | Choice Stim | Response Value

Stimulus Trigger codes (UPPT001)

diamond, 0x1
moon, 0x2
oval, 0x3
plus, 0x4
rectangle, 0x5
trapezoid, 0x6
triangle, 0x7
hapmale, 0xB
hapfem, 0xC
sadmale, 0x15
sadfem, 0x16
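The codes can be kept in a lookup table for decoding UPPT001 values. The face codes are easy to misread if a viewer shows parallel port values in decimal: 0xB, 0xC, 0x15 and 0x16 are 11, 12, 21 and 22. The dictionary and function names are hypothetical.

```python
# Hariri Hammer UPPT001 stimulus codes, as listed above
HARIRI_CODES = {
    "diamond": 0x1, "moon": 0x2, "oval": 0x3, "plus": 0x4,
    "rectangle": 0x5, "trapezoid": 0x6, "triangle": 0x7,
    "hapmale": 0xB, "hapfem": 0xC, "sadmale": 0x15, "sadfem": 0x16,
}
# Reverse lookup for decoding values read from the parallel port channel
CODE_TO_STIM = {code: name for name, code in HARIRI_CODES.items()}


def decode_hariri(value):
    """Return the stimulus name for a UPPT001 value, or None if it is not a stimulus code."""
    return CODE_TO_STIM.get(value)
```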

Processing Scripts:

Files have been compiled into the hv_proc folder, which contains script interfaces to the megblocks package.

Data Quality Assurance and PreUpload

Verify that all tasks have the appropriate number of triggers:
hv_proc/utilities/marker_quality_assurance.py -data_folder $DATA
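What marker_quality_assurance.py checks internally is not described here. As an illustration only, marker counts can be compared with expected values by reading a dataset's CTF MarkerFile.mrk. The sketch assumes each marker block contains a NAME: line and a NUMBER OF SAMPLES: line, each followed by its value on the next line; the function names are hypothetical.

```python
def marker_counts(mrk_text):
    """Map marker name -> NUMBER OF SAMPLES from the text of a CTF MarkerFile.mrk."""
    lines = [line.strip() for line in mrk_text.splitlines()]
    counts, name = {}, None
    for i, line in enumerate(lines[:-1]):
        if line == "NAME:":
            name = lines[i + 1]  # marker name is on the following line
        elif line == "NUMBER OF SAMPLES:" and name is not None:
            counts[name] = int(lines[i + 1])
    return counts


def qa_report(counts, expected):
    """Return mismatches between observed and expected marker counts."""
    return [
        f"{name}: expected {n}, found {counts.get(name, 0)}"
        for name, n in expected.items()
        if counts.get(name, 0) != n
    ]
```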